Background:

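Recently I've been working on threat intelligence, which involves a lot of crawling, storage, and unified presentation. The products my team and I have built still have gaps when it comes to scheduling and centralized management.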

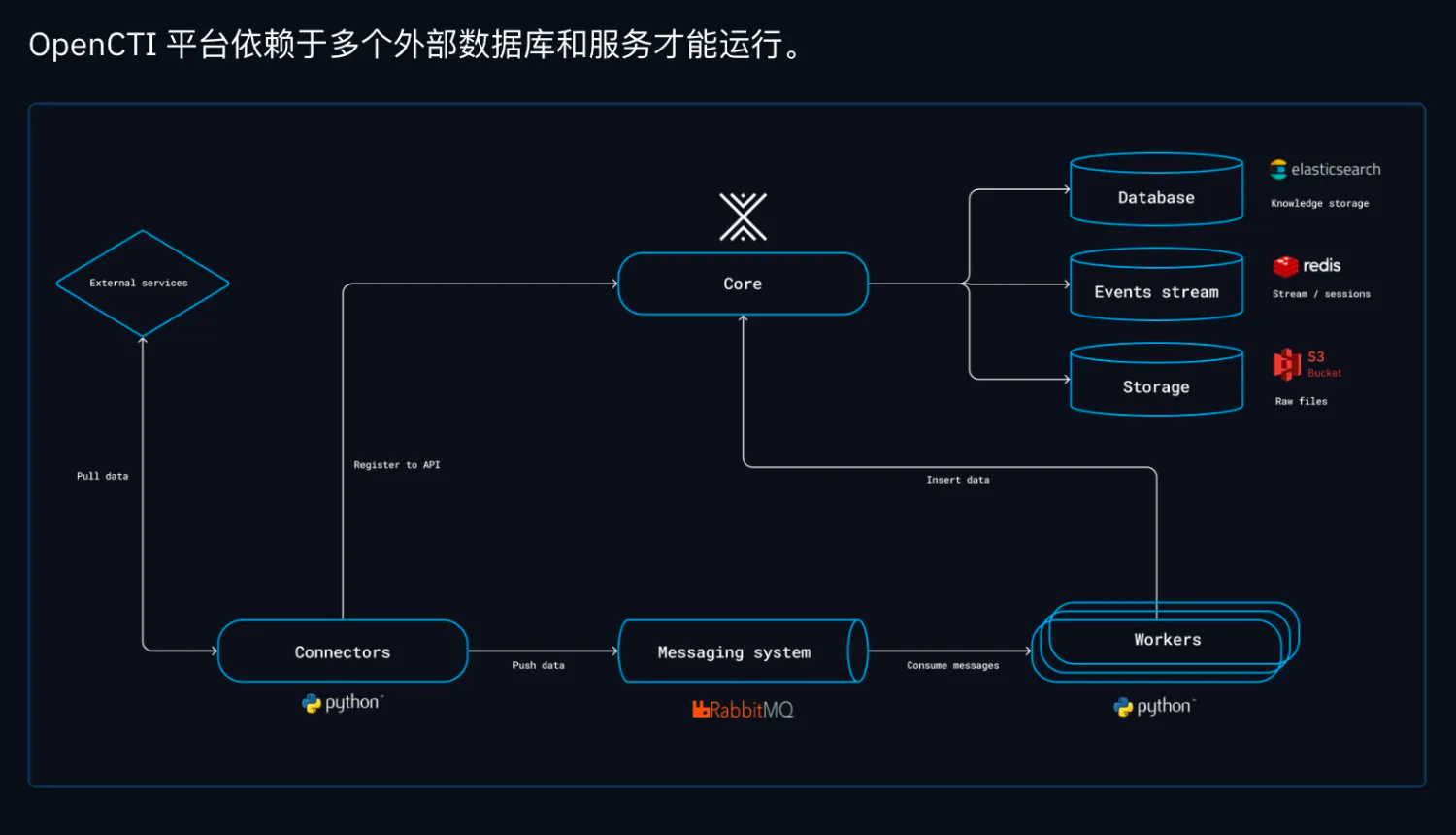

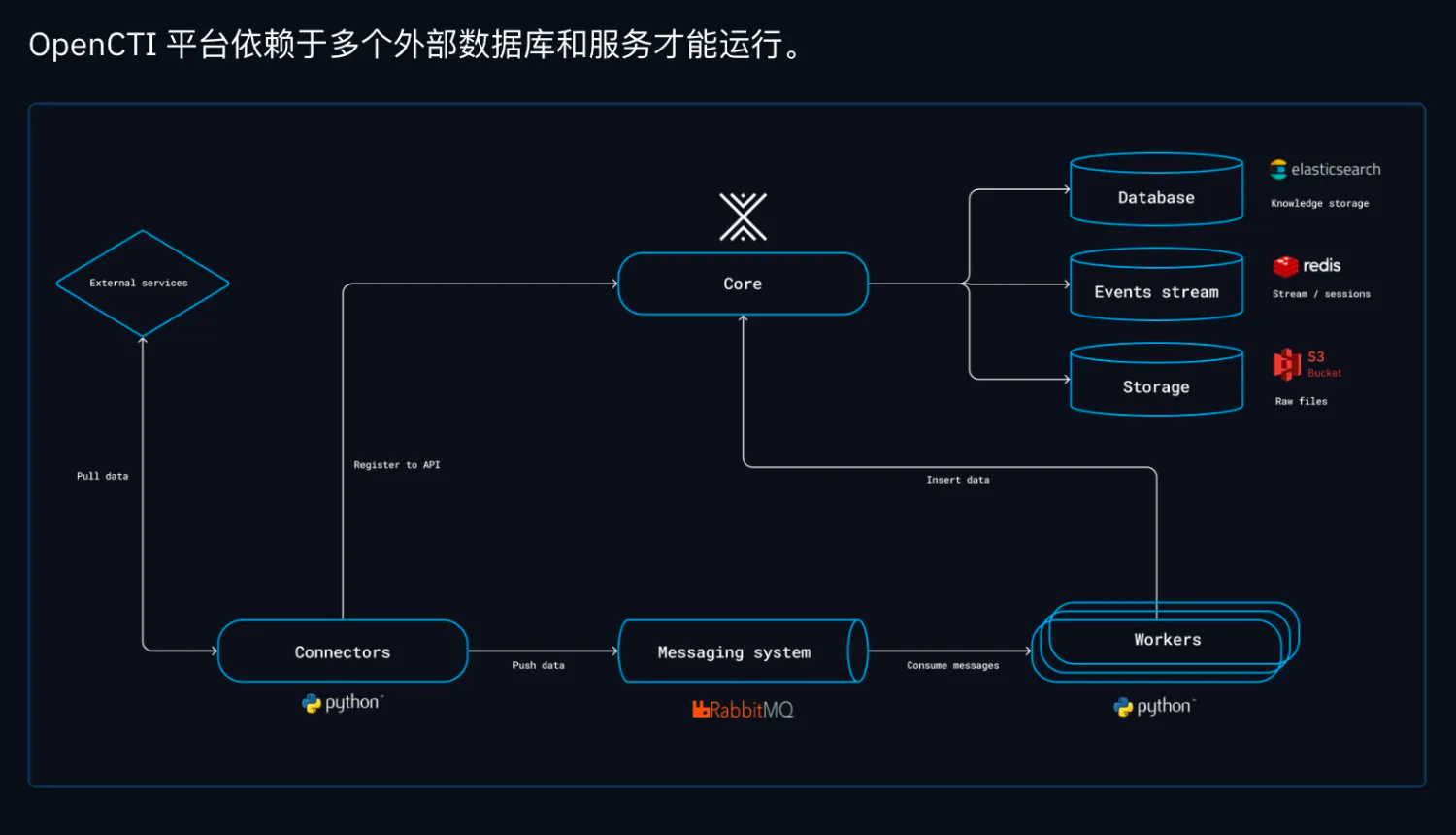

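So I went looking for well-built projects I could learn from and bring ideas back into our product. After a lot of searching, I found that many companies and independent studios outside China use OpenCTI. After reading its introduction and looking at how mature it is, it seemed like a solid project: both its pluggable (hot-swappable) architecture and its ongoing community maintenance are well established.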

那么接下来就上手试试吧!

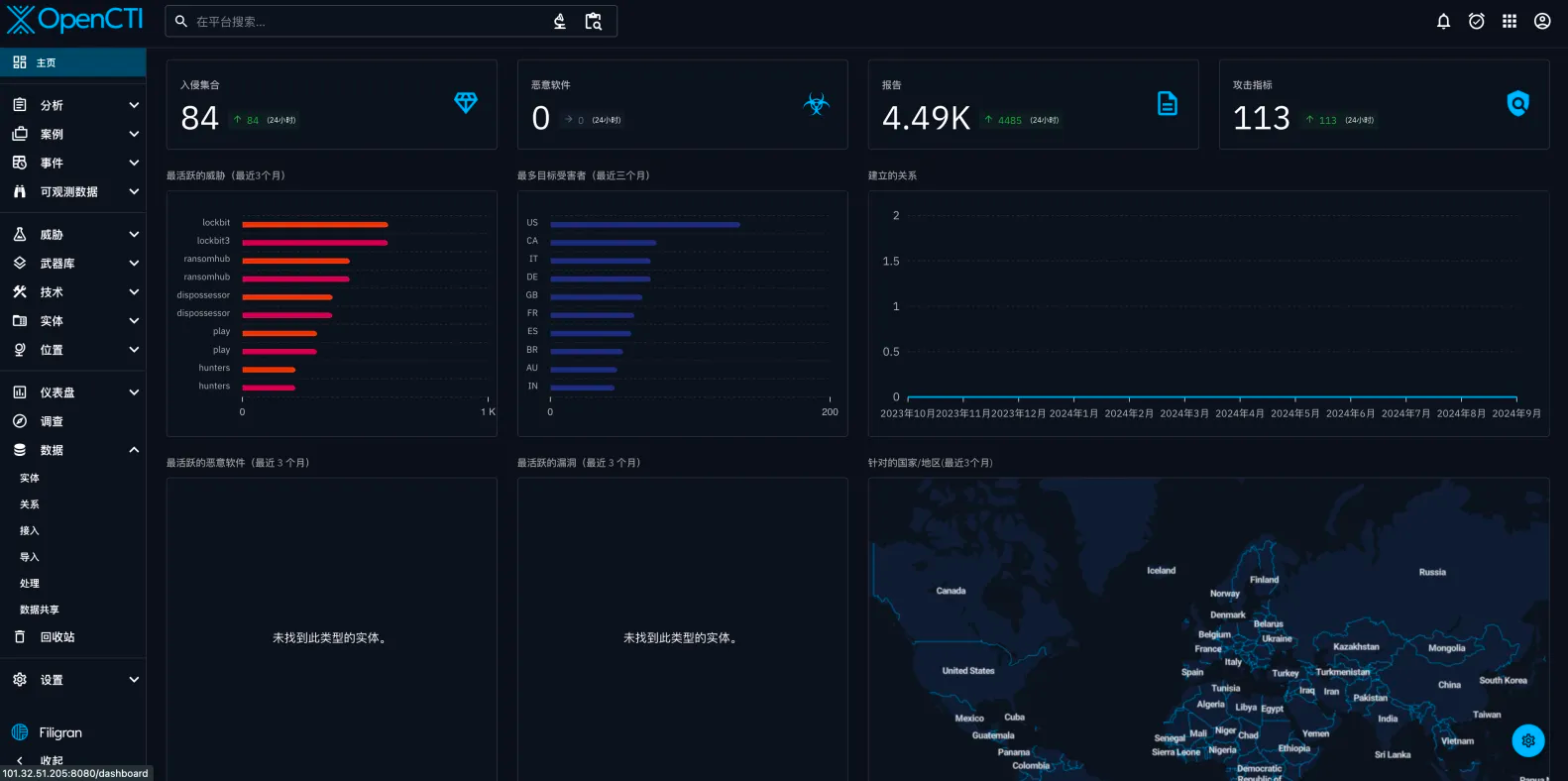

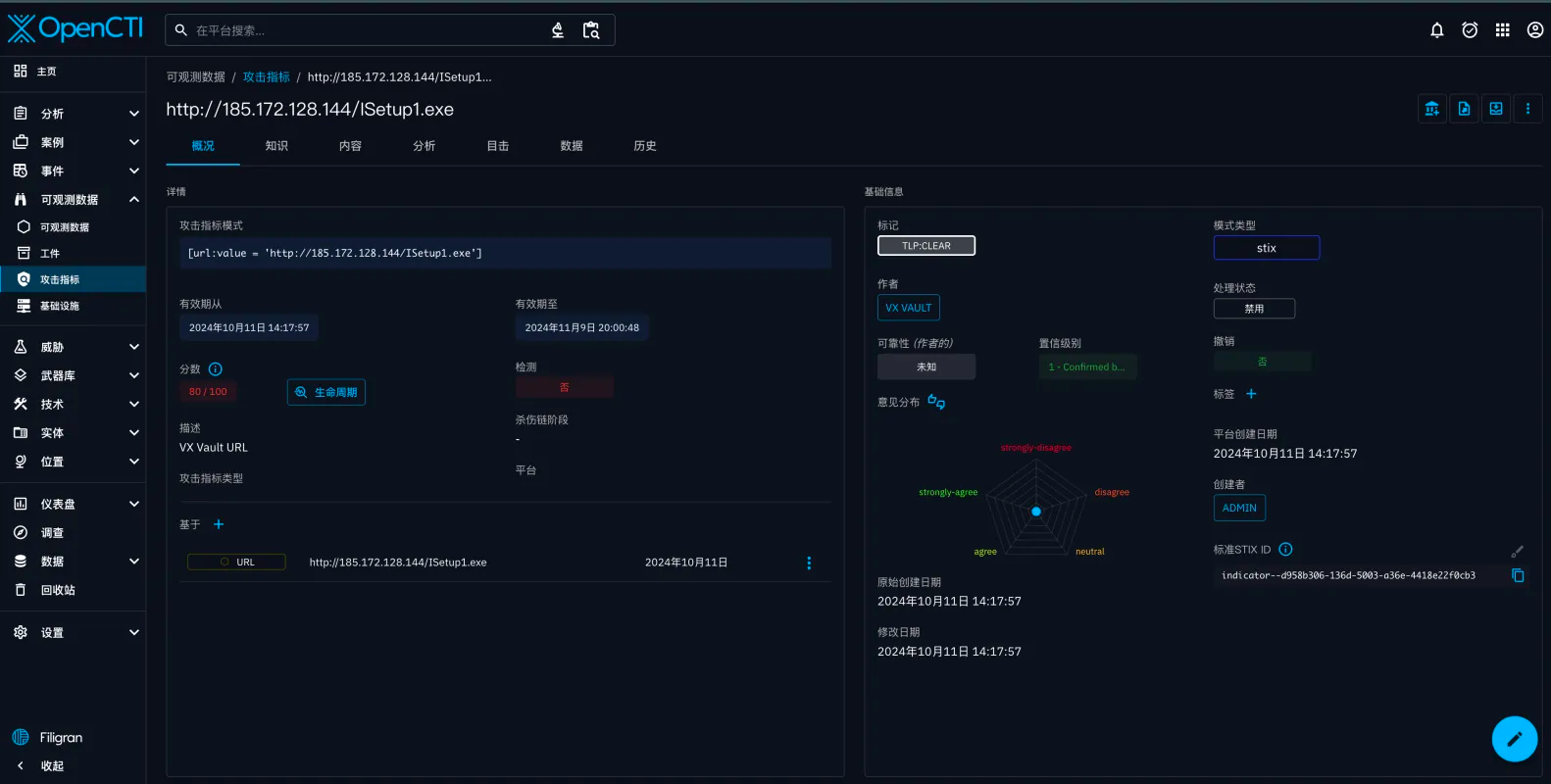

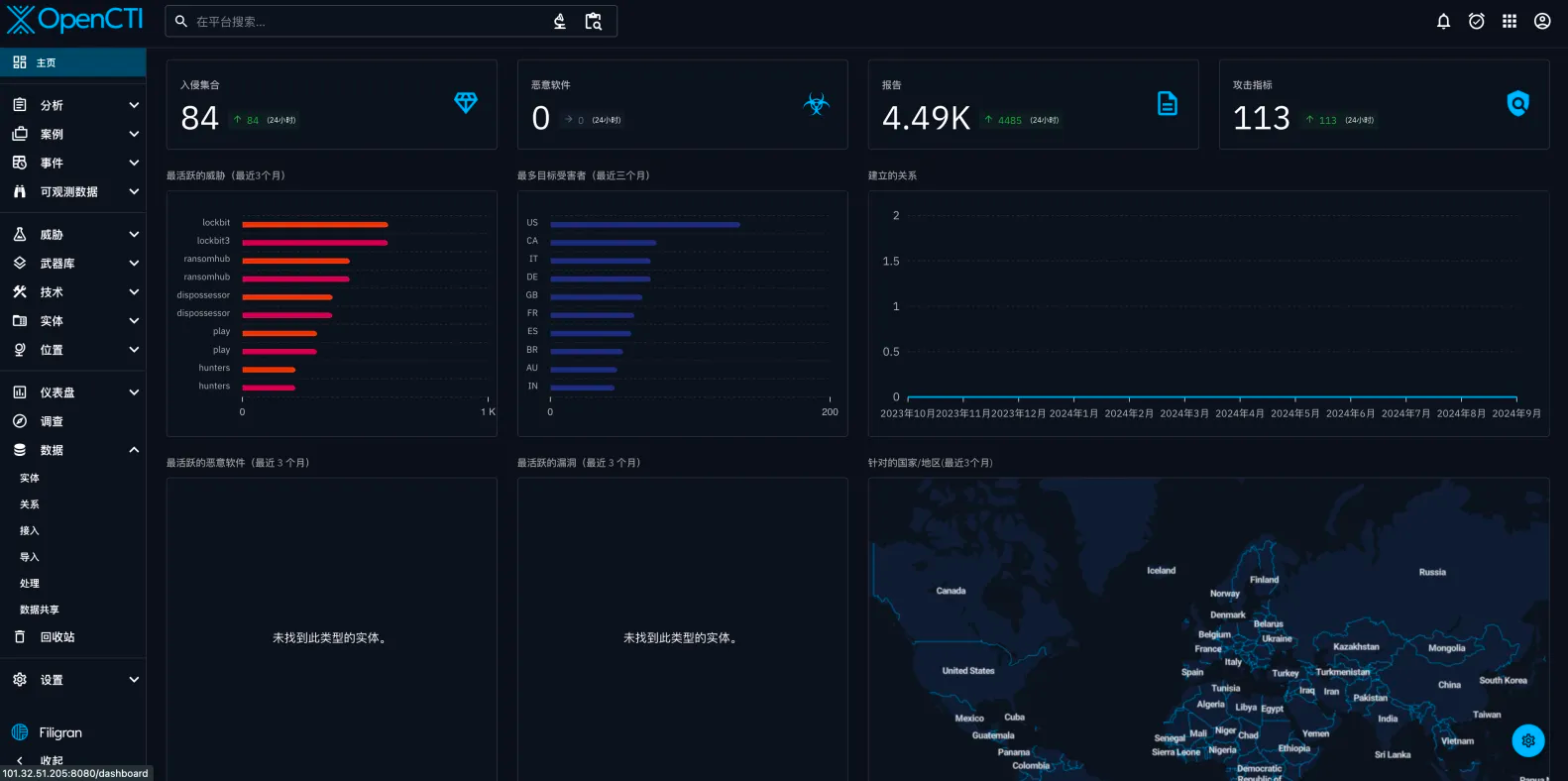

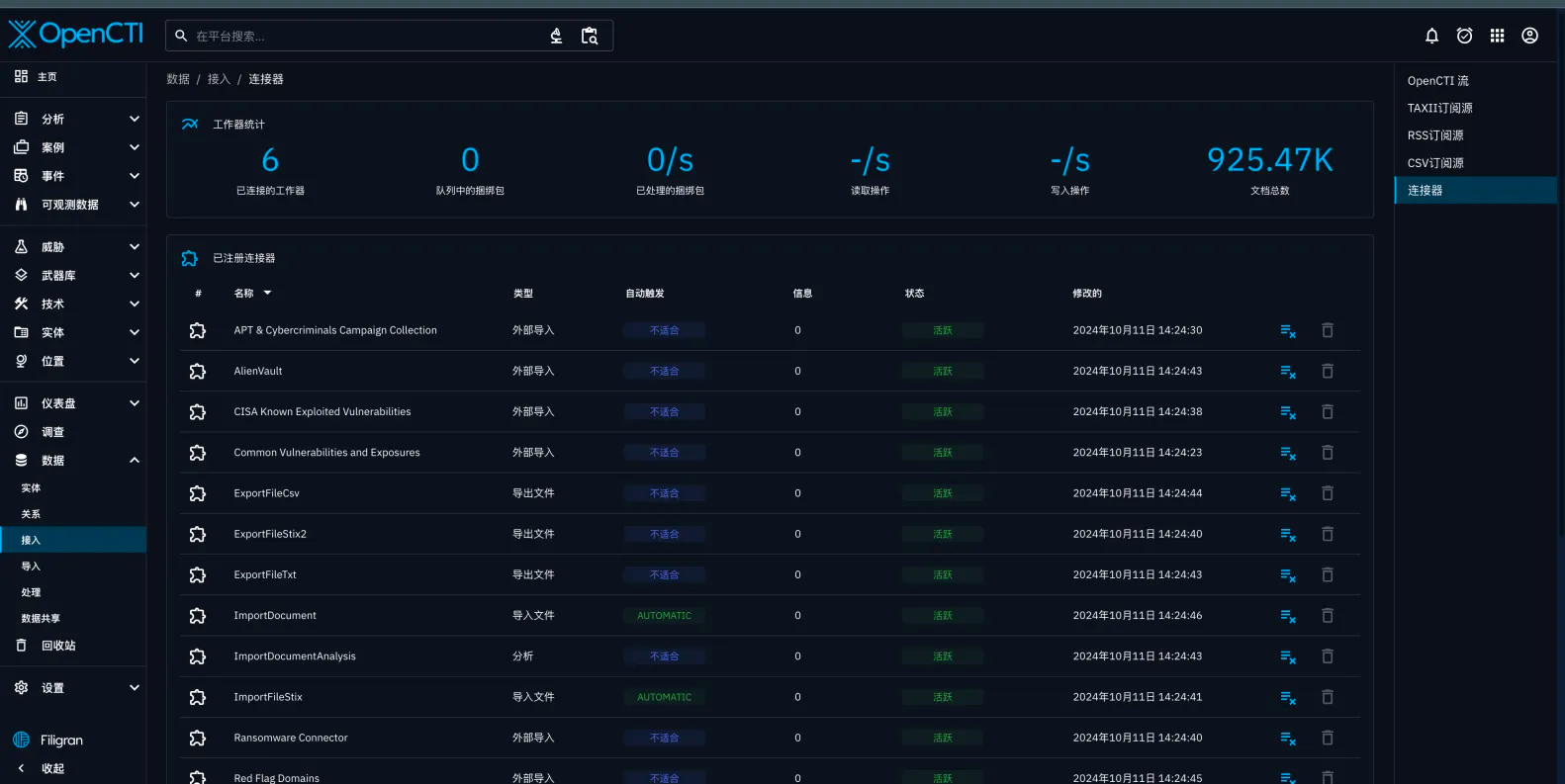

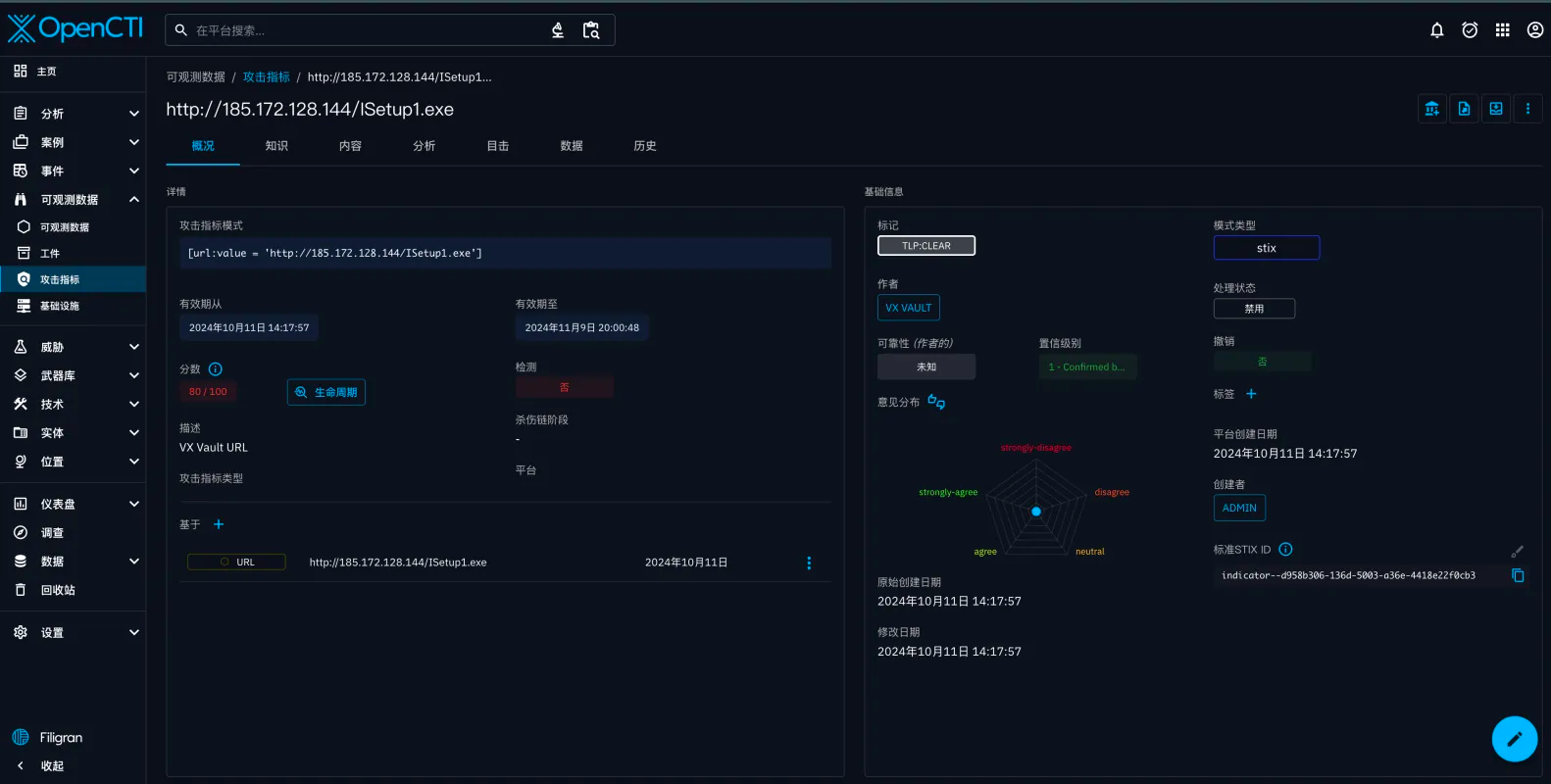

先放效果图:

Preparation:

1. 项目文档:[https://docs.opencti.io/latest/](https://docs.opencti.io/latest/)

2. 项目地址:[https://github.com/OpenCTI-Platform](https://github.com/OpenCTI-Platform)

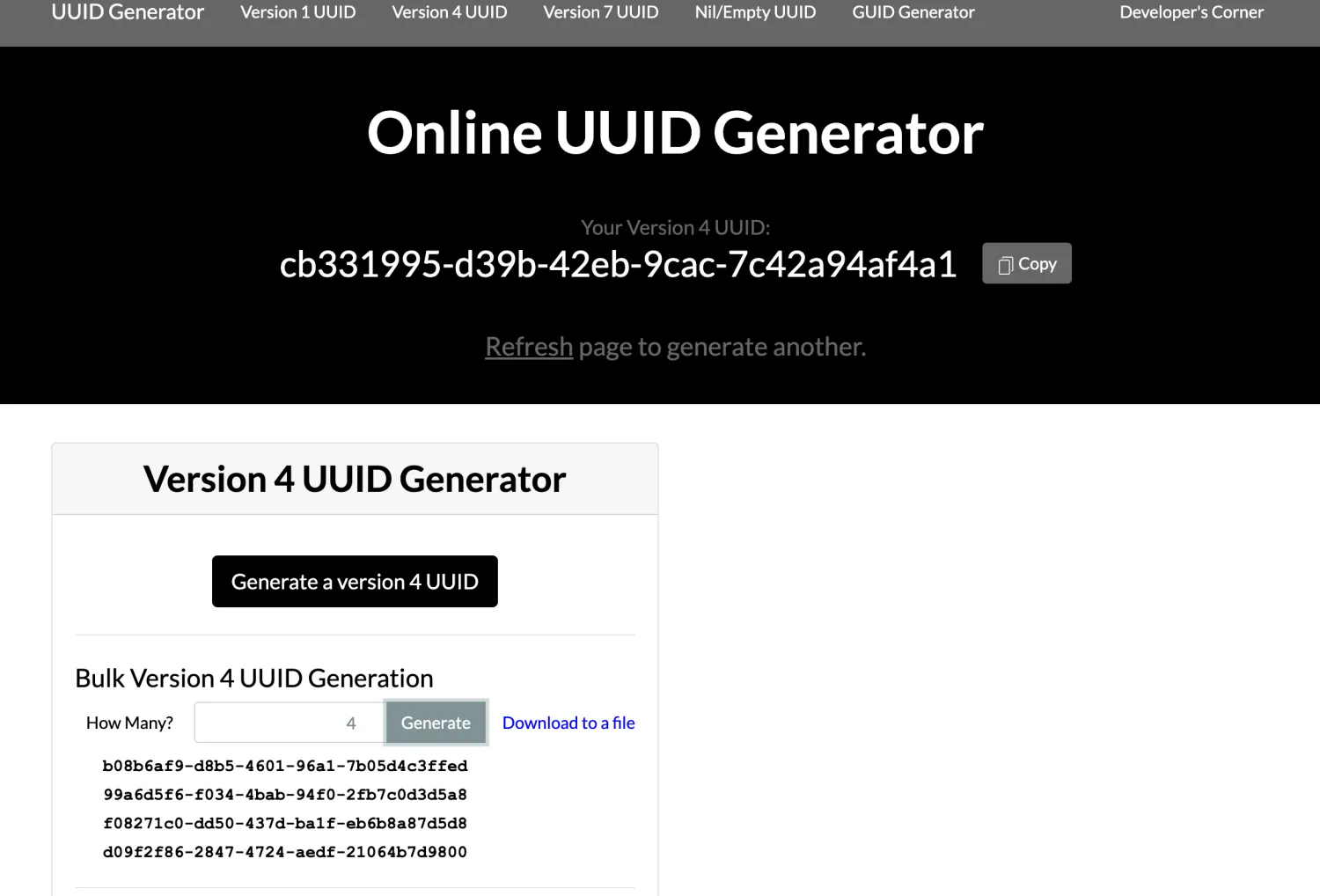

3. 用到的一个生成随机uuid的工具:[https://www.uuidgenerator.net/version4](https://www.uuidgenerator.net/version4)

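I skimmed through the documentation first and found there are several ways to install it: Docker, manual, and cloud.

The project is fairly large, though, so it needs a reasonably powerful machine:

at least 16 GB of RAM and 300 GB of disk.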
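Before installing, it can help to confirm the machine actually meets that rule of thumb. Below is a minimal pre-flight sketch. It assumes a Linux host, because it reads `/proc/meminfo` and uses `df -P`. The helper names `mem_gb`, `disk_free_gb` and `check_min` are hypothetical, and the thresholds are simply the 16 GB / 300 GB figures above.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check for the rough 16 GB RAM / 300 GB disk guideline.

# Total RAM in whole GiB (MemTotal is reported in KiB; awk's %d truncates).
mem_gb() {
  awk '/^MemTotal:/ { printf "%d\n", $2 / 1024 / 1024 }' /proc/meminfo
}

# Free space in whole GiB on the filesystem holding PATH (default: /).
disk_free_gb() {
  df -Pk "${1:-/}" | awk 'NR == 2 { printf "%d\n", $4 / 1024 / 1024 }'
}

# Usage: check_min LABEL HAVE NEED -> prints OK/WARN, returns 1 when HAVE < NEED.
check_min() {
  if [ "$2" -ge "$3" ]; then
    echo "OK   $1: $2 GB (need $3 GB)"
  else
    echo "WARN $1: $2 GB (need $3 GB)"
    return 1
  fi
}
```

For example, run `check_min RAM "$(mem_gb)" 15` and `check_min Disk "$(disk_free_gb /)" 300`. The RAM threshold is 15 rather than 16 because `MemTotal` reports slightly less than a machine's nominal memory size.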

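I used a Tencent Cloud Lighthouse (lightweight application) server. Once I'm done experimenting, I plan to move it onto my NAS (I'll write a tutorial for that later).

Enough talk, let's get started!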

Installing the platform:

Install Docker and Docker Compose

The quickest way is a one-shot script:

```bash
#!/bin/bash
# Stop at the first failing command
set -e

# Install the prerequisites for adding Docker's apt repository
sudo apt-get update
sudo apt-get install -y \
    apt-transport-https \
    ca-certificates \
    curl \
    gnupg \
    lsb-release

# Add Docker's official GPG key and apt repository (Ubuntu)
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg
echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu \
  $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

# Install Docker Engine and check that it can run a container
sudo apt-get update
sudo apt-get install -y docker-ce docker-ce-cli containerd.io
sudo docker run hello-world

# Install the standalone docker-compose binary (-f: fail instead of saving an error page)
sudo curl -fL "https://github.com/docker/compose/releases/latest/download/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
sudo chmod +x /usr/local/bin/docker-compose
docker-compose --version

echo "Docker and Docker Compose have been installed successfully."
```

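Before running this script, make sure you have sufficient privileges (you may need sudo) and that your system supports these commands. The script targets Ubuntu, since it adds Docker's Ubuntu repository. It also does not uninstall older versions of Docker or Docker Compose; if necessary, remove them manually first.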

To use the script:

- Save the code above to a file, for example `install_docker_compose.sh`.
- Make the file executable: `chmod +x install_docker_compose.sh`.
- Run the script: `sudo ./install_docker_compose.sh`.

Always read and understand the commands in any script before running it, to make sure they are safe for your system.
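Once the script finishes, you can quickly confirm that the tools are actually on your `PATH`. This includes `git`, which the next step needs. The snippet below is only a sketch: `require_cmds` is a hypothetical helper built on the POSIX `command -v` builtin.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report which of the given commands are available on PATH.
# Returns 1 if at least one command is missing.
require_cmds() {
  missing=0
  for c in "$@"; do
    if command -v "$c" >/dev/null 2>&1; then
      echo "found:   $c"
    else
      echo "missing: $c"
      missing=1
    fi
  done
  return "$missing"
}
```

For this setup, that would be `require_cmds docker docker-compose git`.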

Download the installation files

Clone the Git repository locally:

```bash
# Create a working directory and clone the repository into it
mkdir -p /home/opencti
cd /home/opencti
git clone https://github.com/OpenCTI-Platform/docker.git
cd docker
```

Elasticsearch uses a very large number of memory-mapped areas, so the kernel's `vm.max_map_count` limit has to be raised:

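```bash
# Persist the setting (writing to /etc requires root)
echo "vm.max_map_count=1048575" | sudo tee -a /etc/sysctl.conf
# Apply it immediately without rebooting
sudo sysctl -p
```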
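Appending to the file adds a duplicate line every time this step is re-run. An idempotent alternative is sketched below: it rewrites an existing `key=value` line, or appends one if none exists. `set_conf_kv` is a hypothetical helper, and the in-place edit assumes GNU `sed`, which is the default on Ubuntu.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set KEY=VALUE in a sysctl-style FILE without duplicating lines.
# Assumes GNU sed (sed -i without a backup suffix).
set_conf_kv() {
  file=$1 key=$2 value=$3
  # Escape dots so "vm.max_map_count" only matches literally in the regexes below.
  key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
  touch "$file"
  if grep -q "^${key_re}[[:space:]]*=" "$file"; then
    # Rewrite the existing line in place.
    sed -i "s|^${key_re}[[:space:]]*=.*|${key}=${value}|" "$file"
  else
    # No existing entry: append one.
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}
```

To use it, run it as root, for example from a `sudo -i` shell: `set_conf_kv /etc/sysctl.d/99-opencti.conf vm.max_map_count 1048575`. Then run `sysctl --system` to load every file under `/etc/sysctl.d`.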

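Edit the configuration and environment variables

The official documentation describes it this way,

but my configuration is as follows: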

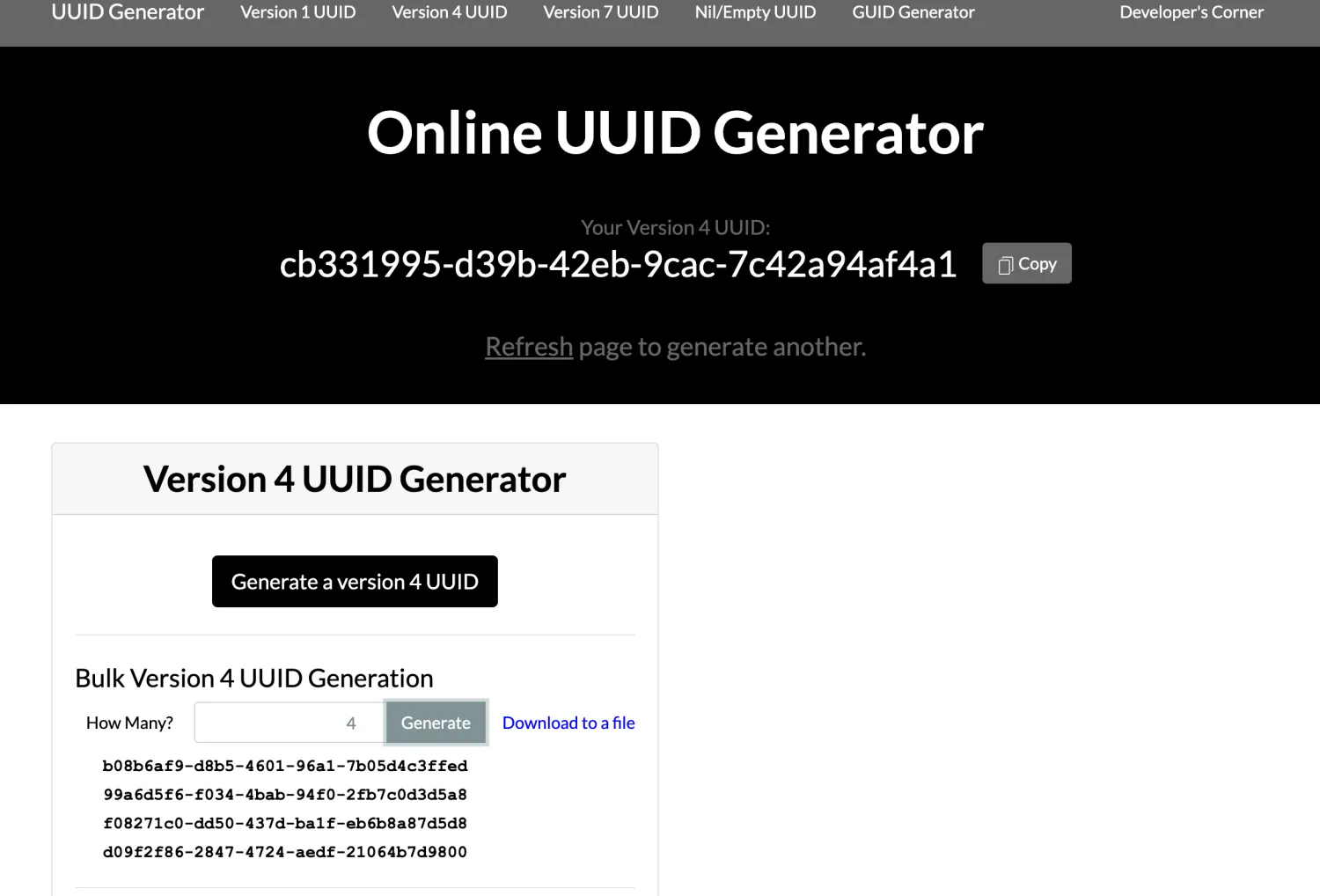

First, generate your own UUIDs:
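If you'd rather not copy values from a website, Linux can generate random (version 4) UUIDs locally. Below is a minimal sketch. It assumes a Linux host (`/proc/sys/kernel/random/uuid`) and falls back to `uuidgen` elsewhere. `gen_uuid` and `print_env_ids` are hypothetical helper names; the variable names you pass in come from the `.env` file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print one random version-4 UUID in lowercase.
gen_uuid() {
  if [ -r /proc/sys/kernel/random/uuid ]; then
    # The Linux kernel returns a fresh random UUID on every read.
    cat /proc/sys/kernel/random/uuid
  else
    # Fallback (e.g. macOS, where uuidgen prints uppercase).
    uuidgen | tr '[:upper:]' '[:lower:]'
  fi
}

# Print NAME=<uuid> lines for each variable name given, ready to paste into .env.
print_env_ids() {
  for name in "$@"; do
    printf '%s=%s\n' "$name" "$(gen_uuid)"
  done
}
```

For example: `print_env_ids OPENCTI_ADMIN_TOKEN CONNECTOR_EXPORT_FILE_STIX_ID CONNECTOR_EXPORT_FILE_CSV_ID`.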

```
# Comments in a .env file start with "#". Keep them on their own line:
# text such as "//" after a value would become part of the value.

# Must be a valid email address, otherwise startup fails
OPENCTI_ADMIN_EMAIL=admin@opencti.io
# Your admin password
OPENCTI_ADMIN_PASSWORD=ChangeMe
# Your admin API token (a UUIDv4)
OPENCTI_ADMIN_TOKEN=************
OPENCTI_BASE_URL=http://0.0.0.0:8080
MINIO_ROOT_USER=******5-a3c5-2ebb2f7a4f56
MINIO_ROOT_PASSWORD=*********-b72e-0a6ebc8b3daa
RABBITMQ_DEFAULT_USER=guest
RABBITMQ_DEFAULT_PASS=guest
ELASTIC_MEMORY_SIZE=4G
CONNECTOR_HISTORY_ID=******-926e-e01ce1a43fb1
CONNECTOR_EXPORT_FILE_STIX_ID=*********6f7-b9f6-d8d113446fe7
CONNECTOR_EXPORT_FILE_CSV_ID=***********-a61b-991a4d2929ef
CONNECTOR_IMPORT_FILE_STIX_ID=***********9eba-98252de156bc
CONNECTOR_EXPORT_FILE_TXT_ID=***********-b171-c9ec67049655
CONNECTOR_IMPORT_DOCUMENT_ID=***********cf-b05e-4a72fcc80289
CONNECTOR_ANALYSIS_ID=***********78-b754-fabfd21d5985
CONNECTOR_ALIENVAULT_ID=***********-4c2a-ad7d-f1edecc70ca3
CONNECTOR_RANSOMWARE_ID=***********4-a0ff-4fe2b1f2576a
CONNECTOR_CISAKEV_ID=***********5-8ffc-205d43209ac6
# Must not be empty
OPENCTI_HEALTHCHECK_ACCESS_KEY=***********a-4e03-b79a-39771628259d
SMTP_HOSTNAME=localhost
```

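Pay special attention here: `OPENCTI_HEALTHCHECK_ACCESS_KEY` must never be empty. Otherwise the `opencti` container's health check fails, and the connectors, which wait for that container to become healthy, will never connect.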
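Problems such as an empty health-check key or a malformed admin value only show up once the containers fail, so checking the `.env` file before starting can save a round trip. Below is a rough sketch. `env_value` and `check_env` are hypothetical helpers that read `KEY=value` lines with plain `grep`/`cut`. That only approximates how Docker Compose parses `.env` files: quoting and inline comments are not handled, so an inline `//` remark after the email address would be flagged as part of the value.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the value of KEY from a KEY=value FILE (empty if absent).
env_value() {
  grep -m1 "^$2=" "$1" | cut -d= -f2-
}

# Hypothetical sanity check for the OpenCTI .env file; returns 1 if any problem is found.
check_env() {
  file=$1 rc=0
  # These must be present and non-empty.
  for key in OPENCTI_ADMIN_EMAIL OPENCTI_ADMIN_PASSWORD OPENCTI_ADMIN_TOKEN OPENCTI_HEALTHCHECK_ACCESS_KEY; do
    if [ -z "$(env_value "$file" "$key")" ]; then
      echo "empty or missing: $key"
      rc=1
    fi
  done
  # The admin login must look like an email address (no spaces, one "@", a dot in the domain).
  if ! env_value "$file" OPENCTI_ADMIN_EMAIL | grep -Eq '^[^@[:space:]]+@[^@[:space:]]+\.[^@[:space:]]+$'; then
    echo "not an email address: OPENCTI_ADMIN_EMAIL"
    rc=1
  fi
  # The admin token must be a UUIDv4.
  if ! env_value "$file" OPENCTI_ADMIN_TOKEN | grep -Eqi '^[0-9a-f]{8}-[0-9a-f]{4}-4[0-9a-f]{3}-[89ab][0-9a-f]{3}-[0-9a-f]{12}$'; then
    echo "not a UUIDv4: OPENCTI_ADMIN_TOKEN"
    rc=1
  fi
  return "$rc"
}
```

Run `check_env .env` from the `docker` directory before `docker-compose up -d`. No output and exit status 0 means the basic checks passed.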

Start the project

If you run into errors, remove the containers and start them again:

```bash
docker-compose down
docker-compose up -d
```
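After `up -d` the platform may take a while to become healthy, because it waits for Redis, Elasticsearch, MinIO and RabbitMQ. Rather than refreshing the browser, you can poll the same `/health` endpoint the compose file's healthcheck uses. Below is a minimal sketch; `retry` is a hypothetical helper that re-runs any command until it succeeds or the attempt budget runs out.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run a command up to TRIES times, sleeping DELAY seconds between attempts.
# Usage: retry TRIES DELAY command [args...]
retry() {
  tries=$1 delay=$2
  shift 2
  n=1
  until "$@"; do
    if [ "$n" -ge "$tries" ]; then
      echo "giving up after $n attempts: $*" >&2
      return 1
    fi
    n=$((n + 1))
    sleep "$delay"
  done
}
```

For example, `retry 60 10 curl -fsS -o /dev/null "http://localhost:8080/health?health_access_key=<your OPENCTI_HEALTHCHECK_ACCESS_KEY>"` waits roughly ten minutes at most.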

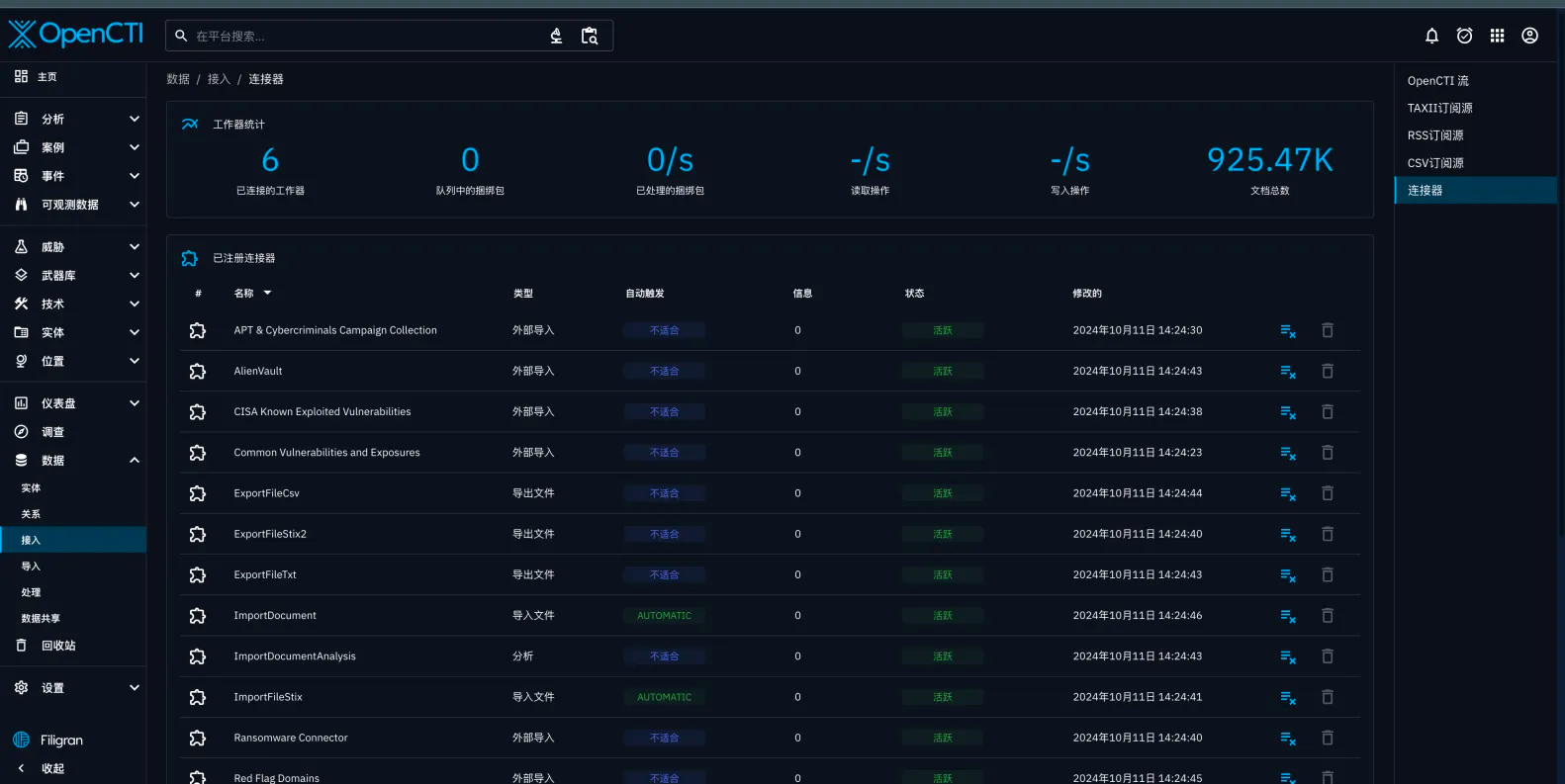

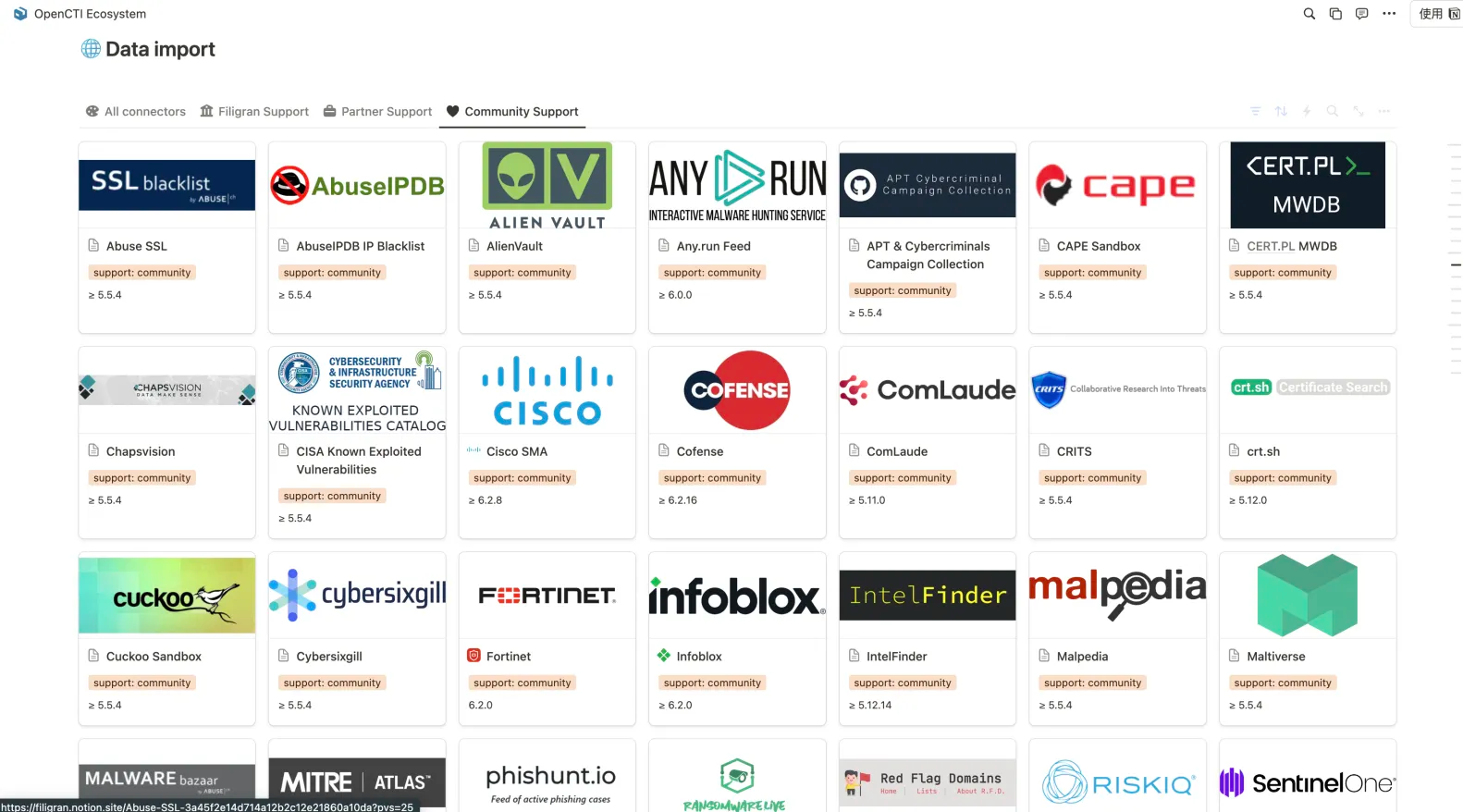

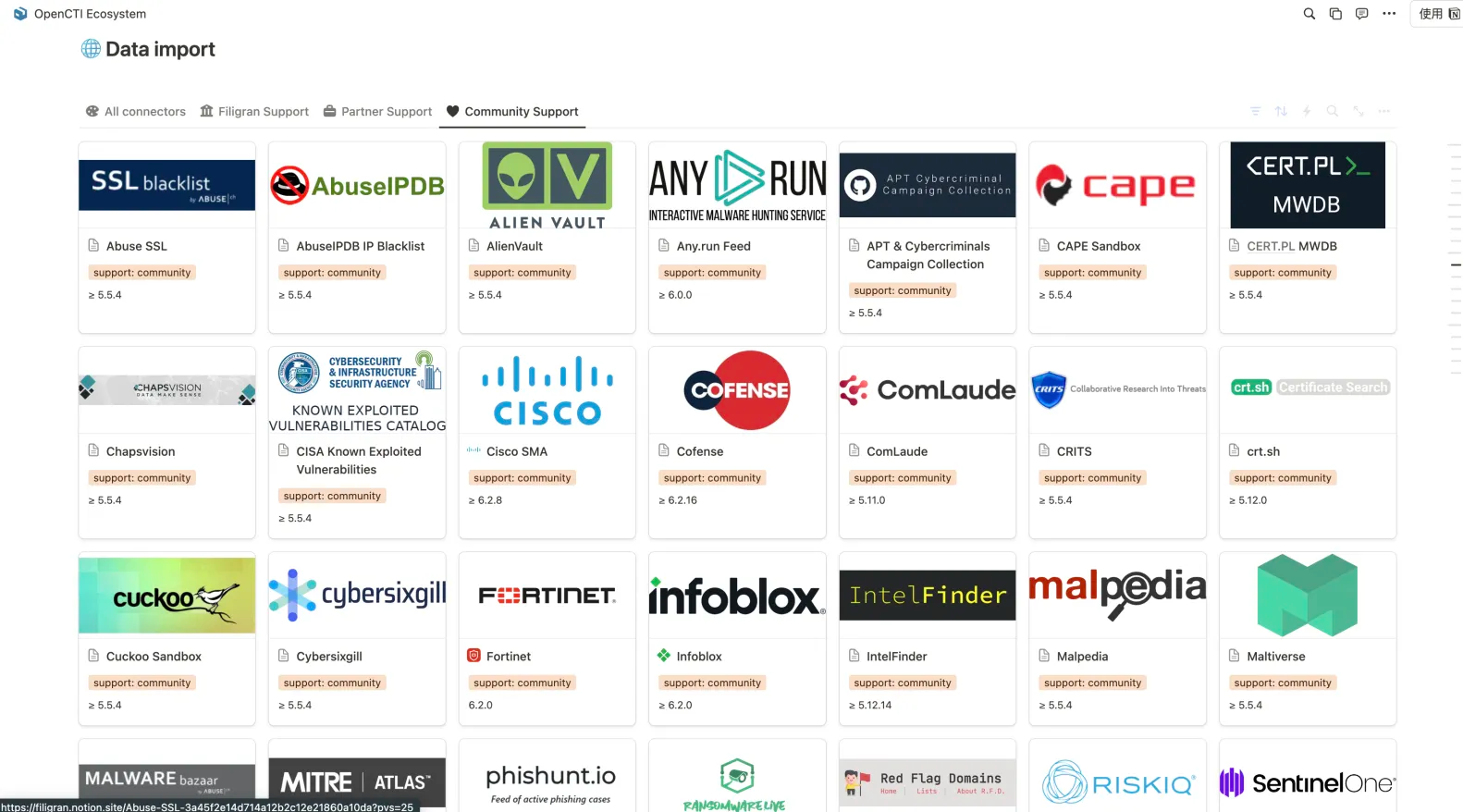

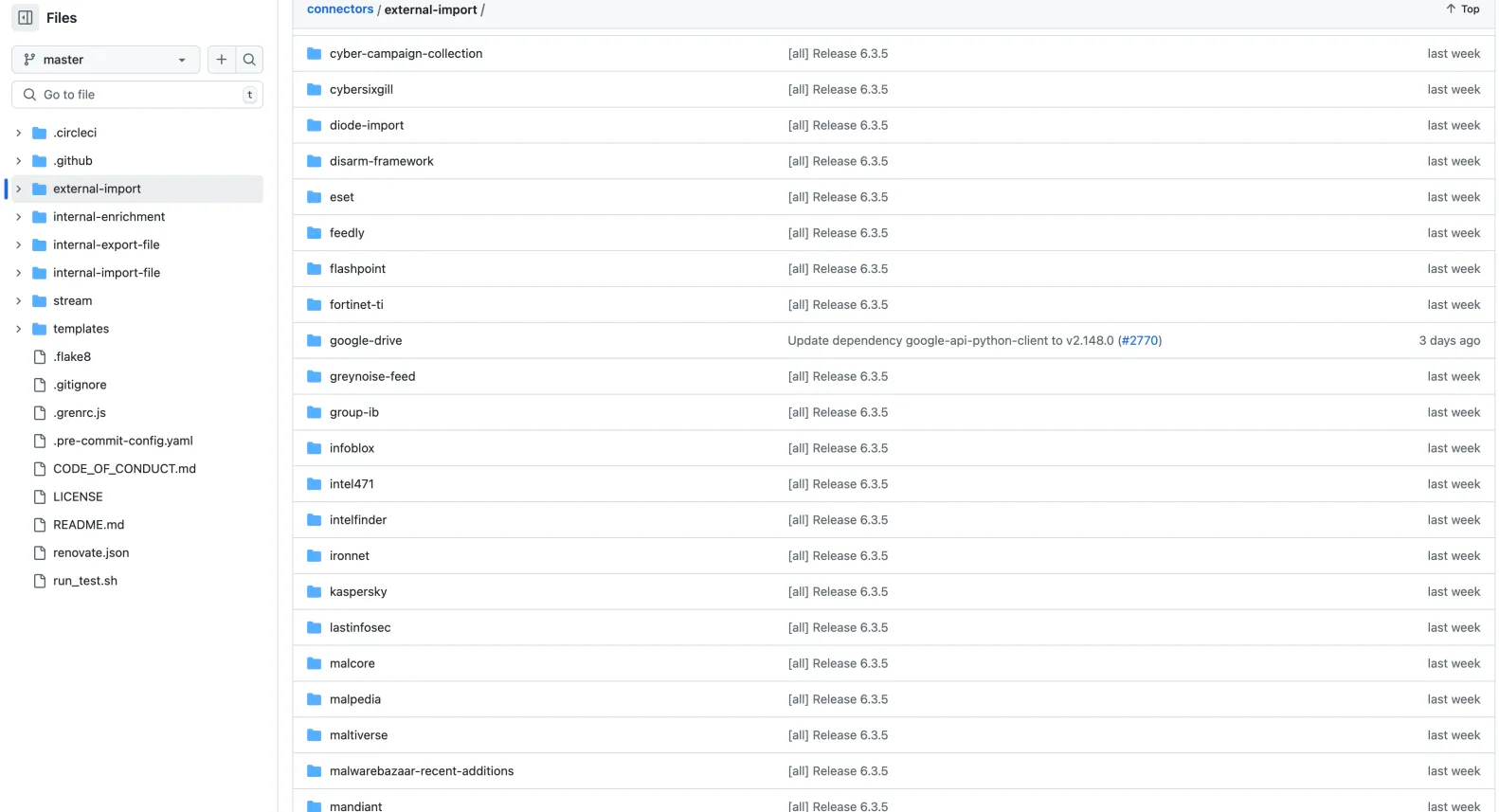

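Adding data and connectors

As the project introduction explains, the platform itself only serves as a display layer. Right now it has no data,

so external data has to be imported.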

https://filigran.notion.site/OpenCTI-Ecosystem-868329e9fb734fca89692b2ed6087e76

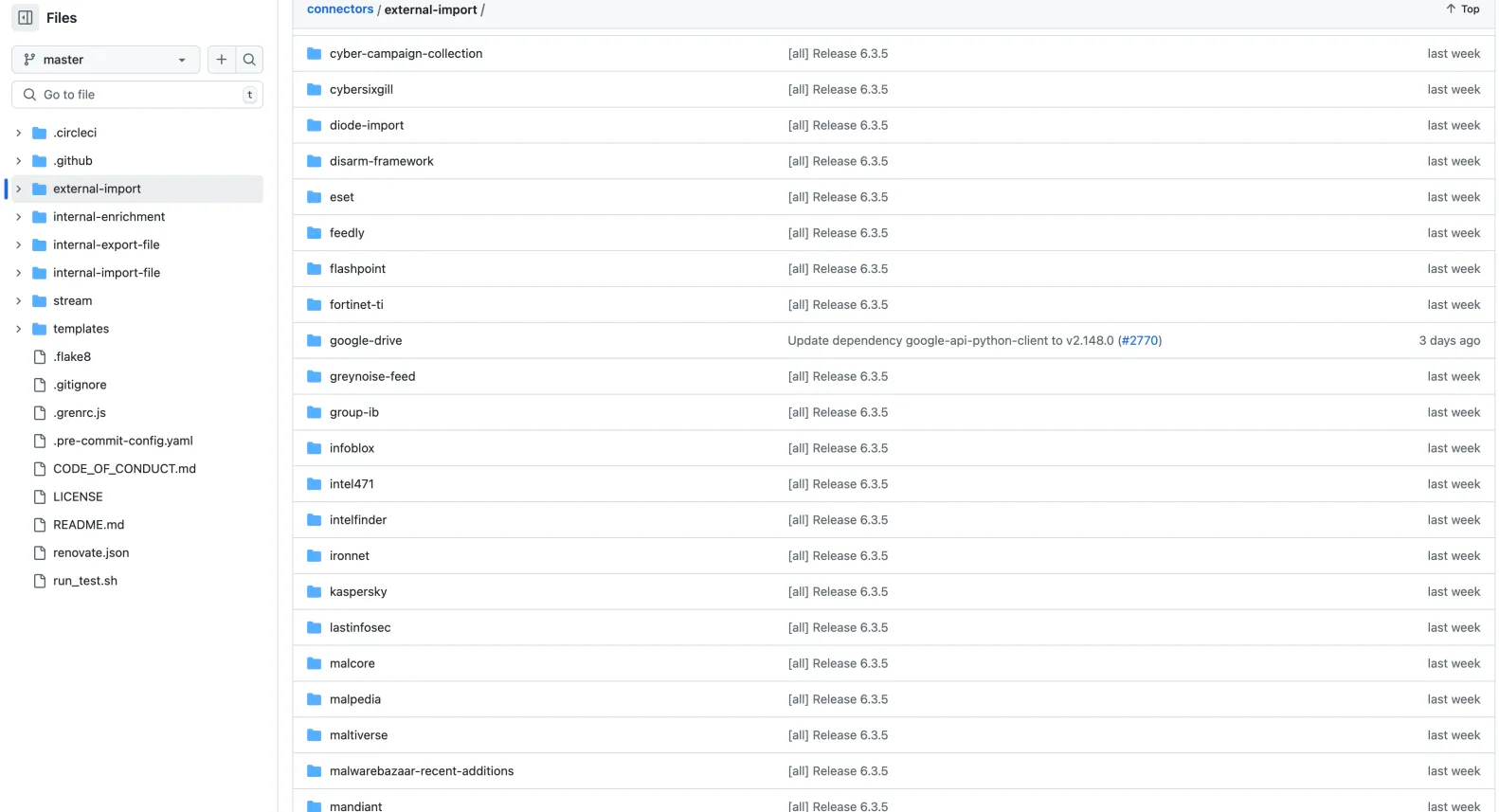

https://github.com/OpenCTI-Platform/connectors/tree/master/external-import

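You can browse the available connectors directly in the official community resources above.

They are essentially crawler probes.

Here are a few examples: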

```yaml
# Replace xx.xx.xx.xx with your server's address, and the masked (****) IDs and API keys with your own values.
services:
  redis:
    image: redis:7.4.0
    restart: always
    volumes:
      - redisdata:/data
    healthcheck:
      test: ["CMD", "redis-cli", "ping"]
      interval: 10s
      timeout: 5s
      retries: 3
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:8.15.2
    volumes:
      - esdata:/usr/share/elasticsearch/data
    environment:
      - discovery.type=single-node
      - xpack.ml.enabled=false
      - xpack.security.enabled=false
      - thread_pool.search.queue_size=5000
      - logger.org.elasticsearch.discovery="ERROR"
      - "ES_JAVA_OPTS=-Xms${ELASTIC_MEMORY_SIZE} -Xmx${ELASTIC_MEMORY_SIZE}"
    restart: always
    ulimits:
      memlock:
        soft: -1
        hard: -1
      nofile:
        soft: 65536
        hard: 65536
    healthcheck:
      test: curl -s http://elasticsearch:9200 >/dev/null || exit 1
      interval: 30s
      timeout: 10s
      retries: 50
  minio:
    image: minio/minio:RELEASE.2024-05-28T17-19-04Z
    volumes:
      - s3data:/data
    ports:
      - "9000:9000"
    environment:
      MINIO_ROOT_USER: ${MINIO_ROOT_USER}
      MINIO_ROOT_PASSWORD: ${MINIO_ROOT_PASSWORD}
    command: server /data
    restart: always
    healthcheck:
      test: ["CMD", "mc", "ready", "local"]
      interval: 10s
      timeout: 5s
      retries: 3
  rabbitmq:
    image: rabbitmq:3.13-management
    environment:
      - RABBITMQ_DEFAULT_USER=${RABBITMQ_DEFAULT_USER}
      - RABBITMQ_DEFAULT_PASS=${RABBITMQ_DEFAULT_PASS}
      - RABBITMQ_NODENAME=rabbit01@localhost
    volumes:
      - amqpdata:/var/lib/rabbitmq
    restart: always
    healthcheck:
      test: rabbitmq-diagnostics -q ping
      interval: 30s
      timeout: 30s
      retries: 3
  opencti:
    image: opencti/platform:6.3.5
    environment:
      - NODE_OPTIONS=--max-old-space-size=8096
      - APP__PORT=8080
      - APP__BASE_URL=${OPENCTI_BASE_URL}
      - APP__ADMIN__EMAIL=${OPENCTI_ADMIN_EMAIL}
      - APP__ADMIN__PASSWORD=${OPENCTI_ADMIN_PASSWORD}
      - APP__ADMIN__TOKEN=${OPENCTI_ADMIN_TOKEN}
      - APP__APP_LOGS__LOGS_LEVEL=error
      - REDIS__HOSTNAME=redis
      - REDIS__PORT=6379
      - ELASTICSEARCH__URL=http://elasticsearch:9200
      - MINIO__ENDPOINT=minio
      - MINIO__PORT=9000
      - MINIO__USE_SSL=false
      - MINIO__ACCESS_KEY=${MINIO_ROOT_USER}
      - MINIO__SECRET_KEY=${MINIO_ROOT_PASSWORD}
      - RABBITMQ__HOSTNAME=rabbitmq
      - RABBITMQ__PORT=5672
      - RABBITMQ__PORT_MANAGEMENT=15672
      - RABBITMQ__MANAGEMENT_SSL=false
      - RABBITMQ__USERNAME=${RABBITMQ_DEFAULT_USER}
      - RABBITMQ__PASSWORD=${RABBITMQ_DEFAULT_PASS}
      - SMTP__HOSTNAME=${SMTP_HOSTNAME}
      - SMTP__PORT=25
      - PROVIDERS__LOCAL__STRATEGY=LocalStrategy
      - APP__HEALTH_ACCESS_KEY=${OPENCTI_HEALTHCHECK_ACCESS_KEY}
    ports:
      - "8080:8080"
    depends_on:
      redis:
        condition: service_healthy
      elasticsearch:
        condition: service_healthy
      minio:
        condition: service_healthy
      rabbitmq:
        condition: service_healthy
    restart: always
    healthcheck:
      test: ["CMD", "wget", "-qO-", "http://xx.xx.xx.xx:8080/health?health_access_key=${OPENCTI_HEALTHCHECK_ACCESS_KEY}"]
      interval: 10s
      timeout: 5s
      retries: 20
  worker:
    image: opencti/worker:6.3.5
    environment:
      - OPENCTI_URL=http://xx.xx.xx.xx:8080
      - OPENCTI_TOKEN=${OPENCTI_ADMIN_TOKEN}
      - WORKER_LOG_LEVEL=info
    depends_on:
      opencti:
        condition: service_healthy
    deploy:
      mode: replicated
      replicas: 6
    restart: always
  connector-export-file-stix:
    image: opencti/connector-export-file-stix:6.3.5
    environment:
      - OPENCTI_URL=http://xx.xx.xx.xx:8080
      - OPENCTI_TOKEN=${OPENCTI_ADMIN_TOKEN}
      - CONNECTOR_ID=${CONNECTOR_EXPORT_FILE_STIX_ID}
      - CONNECTOR_TYPE=INTERNAL_EXPORT_FILE
      - CONNECTOR_NAME=ExportFileStix2
      - CONNECTOR_SCOPE=application/json
      - CONNECTOR_LOG_LEVEL=info
    restart: always
    depends_on:
      opencti:
        condition: service_healthy
  connector-export-file-csv:
    image: opencti/connector-export-file-csv:6.3.5
    environment:
      - OPENCTI_URL=http://xx.xx.xx.xx:8080
      - OPENCTI_TOKEN=${OPENCTI_ADMIN_TOKEN}
      - CONNECTOR_ID=${CONNECTOR_EXPORT_FILE_CSV_ID}
      - CONNECTOR_TYPE=INTERNAL_EXPORT_FILE
      - CONNECTOR_NAME=ExportFileCsv
      - CONNECTOR_SCOPE=text/csv
      - CONNECTOR_LOG_LEVEL=info
    restart: always
    depends_on:
      opencti:
        condition: service_healthy
  connector-export-file-txt:
    image: opencti/connector-export-file-txt:6.3.5
    environment:
      - OPENCTI_URL=http://xx.xx.xx.xx:8080
      - OPENCTI_TOKEN=${OPENCTI_ADMIN_TOKEN}
      - CONNECTOR_ID=${CONNECTOR_EXPORT_FILE_TXT_ID}
      - CONNECTOR_TYPE=INTERNAL_EXPORT_FILE
      - CONNECTOR_NAME=ExportFileTxt
      - CONNECTOR_SCOPE=text/plain
      - CONNECTOR_LOG_LEVEL=info
    restart: always
    depends_on:
      opencti:
        condition: service_healthy
  connector-import-file-stix:
    image: opencti/connector-import-file-stix:6.3.5
    environment:
      - OPENCTI_URL=http://xx.xx.xx.xx:8080
      - OPENCTI_TOKEN=${OPENCTI_ADMIN_TOKEN}
      - CONNECTOR_ID=${CONNECTOR_IMPORT_FILE_STIX_ID}
      - CONNECTOR_TYPE=INTERNAL_IMPORT_FILE
      - CONNECTOR_NAME=ImportFileStix
      - CONNECTOR_VALIDATE_BEFORE_IMPORT=true
      - CONNECTOR_SCOPE=application/json,text/xml
      - CONNECTOR_AUTO=true
      - CONNECTOR_LOG_LEVEL=info
    restart: always
    depends_on:
      opencti:
        condition: service_healthy
  connector-import-document:
    image: opencti/connector-import-document:6.3.5
    environment:
      - OPENCTI_URL=http://xx.xx.xx.xx:8080
      - OPENCTI_TOKEN=${OPENCTI_ADMIN_TOKEN}
      - CONNECTOR_ID=${CONNECTOR_IMPORT_DOCUMENT_ID}
      - CONNECTOR_TYPE=INTERNAL_IMPORT_FILE
      - CONNECTOR_NAME=ImportDocument
      - CONNECTOR_VALIDATE_BEFORE_IMPORT=true
      - CONNECTOR_SCOPE=application/pdf,text/plain,text/html
      - CONNECTOR_AUTO=true
      - CONNECTOR_ONLY_CONTEXTUAL=false
      - CONNECTOR_CONFIDENCE_LEVEL=15
      - CONNECTOR_LOG_LEVEL=info
      - IMPORT_DOCUMENT_CREATE_INDICATOR=true
    restart: always
    depends_on:
      opencti:
        condition: service_healthy
  connector-analysis:
    image: opencti/connector-import-document:6.3.5
    environment:
      - OPENCTI_URL=http://xx.xx.xx.xx:8080
      - OPENCTI_TOKEN=${OPENCTI_ADMIN_TOKEN}
      - CONNECTOR_ID=${CONNECTOR_ANALYSIS_ID}
      - CONNECTOR_TYPE=INTERNAL_ANALYSIS
      - CONNECTOR_NAME=ImportDocumentAnalysis
      - CONNECTOR_VALIDATE_BEFORE_IMPORT=false
      - CONNECTOR_SCOPE=application/pdf,text/plain,text/html
      - CONNECTOR_AUTO=true
      - CONNECTOR_ONLY_CONTEXTUAL=false
      - CONNECTOR_CONFIDENCE_LEVEL=15
      - CONNECTOR_LOG_LEVEL=info
    restart: always
    depends_on:
      opencti:
        condition: service_healthy
  connector-cisa-known-exploited-vulnerabilities:
    image: opencti/connector-cisa-known-exploited-vulnerabilities:6.3.5
    environment:
      - OPENCTI_URL=http://xx.xx.xx.xx:8080
      - OPENCTI_TOKEN=${OPENCTI_ADMIN_TOKEN}
      - CONNECTOR_ID=${CONNECTOR_CISAKEV_ID}
      - "CONNECTOR_NAME=CISA Known Exploited Vulnerabilities"
      - CONNECTOR_SCOPE=cisa
      - CONNECTOR_RUN_AND_TERMINATE=false
      - CONNECTOR_DURATION_PERIOD=P2D
      - CISA_CATALOG_URL=https://www.cisa.gov/sites/default/files/feeds/known_exploited_vulnerabilities.json
      - CISA_CREATE_INFRASTRUCTURES=false
      - CISA_TLP=TLP:CLEAR
      - OPENCTI_JSON_LOGGING=true
      - CONNECTOR_LOG_LEVEL=info
    restart: always
    depends_on:
      opencti:
        condition: service_healthy
  connector-alienvault:
    image: opencti/connector-alienvault:6.3.5
    environment:
      - OPENCTI_URL=http://xx.xx.xx.xx:8080
      - OPENCTI_TOKEN=${OPENCTI_ADMIN_TOKEN}
      - CONNECTOR_ID=${CONNECTOR_ALIENVAULT_ID}
      - CONNECTOR_TYPE=EXTERNAL_IMPORT
      - CONNECTOR_NAME=AlienVault
      - CONNECTOR_SCOPE=alienvault
      - CONNECTOR_CONFIDENCE_LEVEL=15
      - CONNECTOR_UPDATE_EXISTING_DATA=false
      - CONNECTOR_LOG_LEVEL=info
      - ALIENVAULT_BASE_URL=https://otx.alienvault.com
      - ALIENVAULT_API_KEY=*****62aac301394ac5c91202b0b408c
      - ALIENVAULT_CREATE_OBSERVABLES=true
      - ALIENVAULT_CREATE_INDICATORS=true
      - ALIENVAULT_PULSE_START_TIMESTAMP=2020-05-01T00:00:00
      - ALIENVAULT_REPORT_TYPE=threat-report
      - ALIENVAULT_REPORT_STATUS=New
      - ALIENVAULT_GUESS_MALWARE=false
      - ALIENVAULT_GUESS_CVE=false
      - ALIENVAULT_EXCLUDED_PULSE_INDICATOR_TYPES=FileHash-MD5,FileHash-SHA1
      - ALIENVAULT_ENABLE_RELATIONSHIPS=true
      - ALIENVAULT_ENABLE_ATTACK_PATTERNS_INDICATES=true
      - ALIENVAULT_INTERVAL_SEC=3600
    restart: always
    depends_on:
      opencti:
        condition: service_healthy
  connector-ransomware:
    image: opencti/connector-ransomwarelive:6.3.5
    container_name: ransomware-connector
    environment:
      - CONNECTOR_NAME=Ransomware Connector
      - CONNECTOR_SCOPE=identity,attack-pattern,course-of-action,intrusion-set,malware,tool,report
      - OPENCTI_URL=http://xx.xx.xx.xx:8080
      - OPENCTI_TOKEN=${OPENCTI_ADMIN_TOKEN}
      - CONNECTOR_ID=${CONNECTOR_RANSOMWARE_ID}
      - CONNECTOR_CONFIDENCE_LEVEL=100
      - CONNECTOR_LOG_LEVEL=info
      - CONNECTOR_UPDATE_EXISTING_DATA=false
      - CONNECTOR_PULL_HISTORY=true
      - CONNECTOR_HISTORY_START_YEAR=2024
      - CONNECTOR_RUN_EVERY=5m
    restart: always
    depends_on:
      opencti:
        condition: service_healthy
  connector-cve:
    image: opencti/connector-cve:6.3.5
    environment:
      - OPENCTI_URL=http://xx.xx.xx.xx:8080
      - OPENCTI_TOKEN=${OPENCTI_ADMIN_TOKEN}
      - CONNECTOR_ID=*************448-d7274d2b99a2
      - CONNECTOR_NAME=Common Vulnerabilities and Exposures
      - CONNECTOR_SCOPE=identity,vulnerability
      - CONNECTOR_RUN_AND_TERMINATE=false
      - CONNECTOR_LOG_LEVEL=error
      - CVE_BASE_URL=https://services.nvd.nist.gov/rest/json/cves/2.0
      # Your NVD API key
      - CVE_API_KEY=********
      - CVE_INTERVAL=2
      - CVE_MAX_DATE_RANGE=120
      - CVE_MAINTAIN_DATA=true
      - CVE_PULL_HISTORY=false
      - CVE_HISTORY_START_YEAR=2019
    restart: always
  connector-redflag-domains:
    image: opencti/connector-red-flag-domains:6.3.5
    environment:
      - OPENCTI_URL=http://xx.xx.xx.xx:8080
      - OPENCTI_TOKEN=${OPENCTI_ADMIN_TOKEN}
      - CONNECTOR_ID=*************9-8ab7-1e7e550a9282
      - "CONNECTOR_NAME=Red Flag Domains"
      - CONNECTOR_SCOPE=red-flag-domains
      - CONNECTOR_CONFIDENCE_LEVEL=70
      - UPDATE_EXISTING_DATA=true
      - CONNECTOR_LOG_LEVEL=info
      - REDFLAGDOMAINS_URL=https://dl.red.flag.domains/daily/
    restart: always
  connector-urlscan:
    image: opencti/connector-urlscan:6.3.5
    environment:
      - OPENCTI_URL=http://xx.xx.xx.xx:8080
      - OPENCTI_TOKEN=${OPENCTI_ADMIN_TOKEN}
      - CONNECTOR_ID=*************5b7-bcc8-7fcccfd7be8d
      - CONNECTOR_NAME=Urlscan
      - CONNECTOR_SCOPE=urlscan
      - CONNECTOR_LOG_LEVEL=error
      - CONNECTOR_CONFIDENCE_LEVEL=40
      - CONNECTOR_CREATE_INDICATORS=true
      - CONNECTOR_TLP=white
      - CONNECTOR_LABELS=Phishing,Phishfeed
      - CONNECTOR_INTERVAL=86400
      - CONNECTOR_LOOKBACK=3
      - URLSCAN_URL=https://urlscan.io/api/v1/pro/phishfeed?format=json
      # Your urlscan.io API key
      - URLSCAN_API_KEY=********
      - URLSCAN_DEFAULT_X_OPENCTI_SCORE=50
    restart: always
  connector-tweetfeed:
    image: opencti/connector-tweetfeed:6.3.5
    environment:
      - OPENCTI_URL=http://xx.xx.xx.xx:8080
      - OPENCTI_TOKEN=${OPENCTI_ADMIN_TOKEN}
      - CONNECTOR_ID=*************b417-d5608d8fb407
      - CONNECTOR_NAME=Tweetfeed
      - CONNECTOR_SCOPE=tweetfeed
      - CONNECTOR_CONFIDENCE_LEVEL=15
      - CONNECTOR_LOG_LEVEL=error
      - TWEETFEED_CONFIDENCE_LEVEL=15
      - TWEETFEED_CREATE_INDICATORS=true
      - TWEETFEED_CREATE_OBSERVABLES=true
      - TWEETFEED_INTERVAL=1
      - TWEETFEED_UPDATE_EXISTING_DATA=true
      - "TWEETFEED_ORG_DESCRIPTION=Tweetfeed, a connector to import IOC from Twitter."
      - TWEETFEED_ORG_NAME=Tweetfeed
      - TWEETFEED_DAYS_BACK_IN_TIME=30
    restart: always
  connector-vxvault:
    image: opencti/connector-vxvault:6.3.5
    environment:
      - OPENCTI_URL=http://xx.xx.xx.xx:8080
      - OPENCTI_TOKEN=${OPENCTI_ADMIN_TOKEN}
      - CONNECTOR_ID=*************8bcd-e65b8daccd2b
      - "CONNECTOR_NAME=VX Vault URL list"
      - CONNECTOR_SCOPE=vxvault
      - CONNECTOR_LOG_LEVEL=error
      - VXVAULT_URL=https://vxvault.net/URL_List.php
      - VXVAULT_CREATE_INDICATORS=true
      - VXVAULT_INTERVAL=3
      - VXVAULT_SSL_VERIFY=False
    restart: always
  connector-urlhaus-recent-payloads:
    image: opencti/connector-urlhaus-recent-payloads:6.3.5
    environment:
      - OPENCTI_URL=http://xx.xx.xx.xx:8080
      - OPENCTI_TOKEN=${OPENCTI_ADMIN_TOKEN}
      - CONNECTOR_ID=*************a943-b27220d0e1a1
      - "CONNECTOR_NAME=URLhaus Recent Payloads"
      - CONNECTOR_CONFIDENCE_LEVEL=50
      - CONNECTOR_LOG_LEVEL=error
      - URLHAUS_RECENT_PAYLOADS_API_URL=https://urlhaus-api.abuse.ch/v1/
      - URLHAUS_RECENT_PAYLOADS_COOLDOWN_SECONDS=300
      - URLHAUS_RECENT_PAYLOADS_INCLUDE_FILETYPES=exe,dll,docm,docx,doc,xls,xlsx,xlsm,js,xll
      - URLHAUS_RECENT_PAYLOADS_INCLUDE_SIGNATURES=
      - URLHAUS_RECENT_PAYLOADS_SKIP_UNKNOWN_FILETYPES=true
      - URLHAUS_RECENT_PAYLOADS_SKIP_NULL_SIGNATURE=true
      - URLHAUS_RECENT_PAYLOADS_LABELS=urlhaus
      - URLHAUS_RECENT_PAYLOADS_LABELS_COLOR=#54483b
      - URLHAUS_RECENT_PAYLOADS_SIGNATURE_LABEL_COLOR=#0059f7
      - URLHAUS_RECENT_PAYLOADS_FILETYPE_LABEL_COLOR=#54483b
    restart: always
  connector-cyber-campaign-collection:
    image: opencti/connector-cyber-campaign-collection:6.3.5
    environment:
      - OPENCTI_URL=http://xx.xx.xx.xx:8080
      - OPENCTI_TOKEN=${OPENCTI_ADMIN_TOKEN}
      - CONNECTOR_ID=*************87-ac9e107b772f
      - "CONNECTOR_NAME=APT & Cybercriminals Campaign Collection"
      - CONNECTOR_SCOPE=report
      - CONNECTOR_RUN_AND_TERMINATE=false
      - CONNECTOR_LOG_LEVEL=error
      - CYBER_MONITOR_GITHUB_TOKEN=
      - CYBER_MONITOR_FROM_YEAR=2018
      - CYBER_MONITOR_INTERVAL=4
    restart: always
volumes:
  esdata:
  s3data:
  redisdata:
  amqpdata:
```

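Feel free to copy this configuration as-is. Just remember to replace the UUIDs and API keys with your own, and `xx.xx.xx.xx` with your server's address.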
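With this many services, it's easy to miss one. The quick `grep`-based check below lists every line that still contains the `xx.xx.xx.xx` address placeholder or a masked `***` value, so you can see what still needs your own address, UUID or API key. `find_placeholders` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list lines (with line numbers) in FILE that still contain placeholders.
# Returns 1 (grep's "no match" status) once nothing is left to replace.
find_placeholders() {
  grep -nE 'xx\.xx\.xx\.xx|\*{3,}' "$1"
}
```

Run it against both files: `find_placeholders docker-compose.yml` and `find_placeholders .env`.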

Once you've added the connectors, update the deployment so the changes take effect.
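In practice, updating the deployment means re-applying the compose file: Docker Compose recreates only the services whose configuration changed. Below is a small sketch, assuming the standalone `docker-compose` binary installed earlier. `update_stack` is a hypothetical wrapper. If you use the Compose v2 plugin instead, set `COMPOSE="docker compose"`.

```bash
#!/usr/bin/env bash
# Compose command to use; override with COMPOSE="docker compose" for the v2 plugin.
COMPOSE=${COMPOSE:-docker-compose}

# Hypothetical wrapper: validate the compose file, then start new or changed services.
# --remove-orphans also removes containers of services you deleted from the file.
# $COMPOSE is intentionally unquoted so "docker compose" splits into two words.
update_stack() {
  $COMPOSE config --quiet && $COMPOSE up -d --remove-orphans
}
```

Run `update_stack` from the `docker` directory. If the file has a syntax error, `config --quiet` fails and nothing is restarted.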